Frequently Asked Questions

What is ReviewMeta all about?

We are an independent site that helps consumers get a better understanding of the reviews they are reading on various platforms such as Amazon and Bodybuilding.com. We examine reviews on the platforms, and then create a report for each product by running an analysis on them. Our reports help inform you about the origin and nature of the reviews for that product. See some of the best and worst reports here, or you can search for any product that has been reviewed on Amazon or Bodybuilding.com.

Why don’t the platforms simply delete the reviews that look suspicious?

It isn’t quite that simple. Platforms such as Amazon and Bodybuilding.com are doing their absolute best to make sure their reviews are as honest as possible, but it’s not an easy task. They are constantly deleting overtly fake reviews, but they need to be extremely certain that a review is fake before they delete it, and that can often be hard or impossible to prove.

Because ReviewMeta isn’t tasked with the enormous responsibility of detecting AND deleting suspicious reviews, we are much more aggressive about devaluing low-quality reviews. We understand that some honest reviews may get devalued using our approach. But if an honest review gets flat-out deleted by Amazon, it’s a lot more detrimental than ReviewMeta simply devaluing it: we aren’t silencing anyone’s honest opinion. Our method is just an estimate and we are by no means claiming it is 100% accurate. We simply offer an analysis of the existing reviews and our independent interpretation of those results.

So should I ignore the reviews on Amazon and just look at ReviewMeta?

Absolutely not! Review platforms like Amazon and Bodybuilding.com have done an amazing job at gathering hundreds of millions of datapoints of customer feedback on the products that they sell. They are an absolute necessity in helping customers navigate the millions of products available to them. Unfortunately, many brands have chosen to abuse the platforms that were created to help customers, flooding them with low-quality and biased reviews to try to boost their own profits.

Think of ReviewMeta as a supplement to the reviews on Amazon and Bodybuilding.com. You should always start there, then check our reports if you want some deeper insights. We are definitely NOT a substitute for these review platforms, rather a tool to help improve your experience with them.

How can you tell if the reviews are fake?

First off, you’ll notice that we try not to use the word “fake” on our site. This is because there’s no practical way for us to determine, with 100% certainty, whether a review is fake or not. Our analysis checks for several patterns that may indicate whether or not the reviews are naturally occurring.

What do you mean by “Naturally Occurring Reviews”?

In a perfect world, reviewers would leave honest feedback on products based solely on the merits of the product. Their motive for writing reviews would be purely altruistic: to help their fellow customers. If a product has only natural reviews, you’d expect to see a diverse sample of reviewers. You’d see some newer reviewers and some experienced reviewers. You’d see short reviews, some long reviews and some reviews in the middle. You’d expect them to appear evenly over the life of the product, and not just on a few different days.

At ReviewMeta we expect reviews to be natural: they should have predictable patterns that we can easily identify. Since we know what natural reviews look like, it is easy for us to find clues that indicate that reviews might be unnatural. Whether they are straight up fake, written by the rep, or otherwise hand-selected, they still show signs of manipulation, likely from someone with a vested interest in seeing the product succeed, blurring the line between honest customer feedback and a sales pitch.

You can read more about unnatural reviews here.

How do you calculate the ReviewMeta Adjusted Rating?

The ReviewMeta Adjusted Rating is the average product rating that you get after we remove the reviews that we determine to be unnatural. You can read more about the ReviewMeta Adjusted Rating here.
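As a rough illustration of the idea (not ReviewMeta's actual implementation), the adjusted rating can be sketched in Python as the plain average of the ratings that are left once flagged reviews are dropped. The `Review` type, its `unnatural` flag, and the sample data are assumptions made up for this example.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Review:
    stars: int        # star rating from 1 to 5
    unnatural: bool   # hypothetical flag set by some earlier analysis step


def original_rating(reviews):
    """Average of all star ratings, as a platform would show it."""
    return mean(r.stars for r in reviews)


def adjusted_rating(reviews):
    """Average of the ratings that remain after dropping flagged reviews."""
    kept = [r.stars for r in reviews if not r.unnatural]
    if not kept:
        return None  # nothing left to average
    return mean(kept)


# Made-up sample: two flagged 5-star reviews and two unflagged reviews.
sample = [Review(5, True), Review(5, True), Review(4, False), Review(2, False)]
print(original_rating(sample))  # average over all four reviews
print(adjusted_rating(sample))  # average over the two unflagged reviews
```

In this toy sample the two flagged 5-star reviews pull the original average up, so the adjusted average comes out lower.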

Wait a second… How do we know you’re not accepting backdoor bribes from brands to artificially “pass” some products and “fail” some others? How can we trust ReviewMeta?

This is a great question, and we don’t blame your skepticism. You’re here because you already have a healthy level of distrust in what you read on the internet, and we’re just another anonymous voice.

However, the great thing about our service is that you can double-check our numbers. The majority of the figures we show on our site are easy calculations based on what we see directly on the reviewing platforms. We also provide you with an abundance of sample reviews so you can examine them on your own and make your own decision.

Most importantly, allowing brands to pay to be treated differently would completely undermine the entire mission of the site.

If a product fails, does that mean there are definitely fake reviews?

No, it would not be correct to assume this. And again, we don’t like to use the word “fake”.

A product will fail because the adjusted rating is much lower than the original rating, or because a large chunk of reviews were devalued, or both. But we suggest that you don’t rely on our adjusted rating alone – make sure to read our report thoroughly and check the sample reviews before making a determination on your own.

Furthermore, keep in mind that amazing products sometimes have unnatural reviews. Building up reviews is a very well known aspect of selling products on Amazon, and many quality brands have had to get their hands dirty in order to stay competitive.
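The two failure conditions described above (a large drop from the original rating, a large share of devalued reviews, or both) can be sketched as a simple check. This is only an illustration: the function name and both cutoff values are hypothetical and are not ReviewMeta's real thresholds.

```python
def report_result(original, adjusted, devalued_share,
                  max_drop=0.5, max_devalued=0.4):
    """Return "FAIL" if either warning sign is present, else "PASS".

    max_drop (in stars) and max_devalued (a fraction from 0 to 1) are
    hypothetical cutoffs chosen only for this example.
    """
    big_drop = (original - adjusted) >= max_drop
    many_devalued = devalued_share >= max_devalued
    return "FAIL" if (big_drop or many_devalued) else "PASS"


print(report_result(4.7, 4.6, 0.10))  # small drop, few devalued reviews
print(report_result(4.7, 3.9, 0.10))  # large drop in rating
print(report_result(4.7, 4.6, 0.55))  # large share of devalued reviews
```

Either condition alone is enough to fail in this sketch, which mirrors the "or both" wording of the answer.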

Where does ReviewMeta get the data?

We’re getting the data from the same place as you: the publicly available web pages that contain the reviews.

By showing such detailed reports, aren’t you just giving brands detailed instructions on how to defeat the system?

We’ve definitely considered this. However, we’ve chosen to show as much detail as we can for the following reasons:

- Transparency – we want our visitors to know what’s going on behind the scenes so they trust our insights.

- Education – part of the purpose of the site is helping educate consumers about how our formula works and why some reviews are potentially unnatural.

- Subjectivity – not everyone believes in all the criteria we use. For example, some of our users believe that unverified purchase reviews are no less trustworthy than verified purchases. These users can click “View/Edit Adjustment” on any report and recalculate the adjusted rating based on their own personal preference.

- Knowing how to “cheat the system” isn’t going to do brands much good. Manufacturing reviews that don’t raise red flags on our site is MUCH harder to do, takes exponentially more time and energy, and ultimately isn’t something that many brands will bother with.

I noticed a product that either fails/passes when it definitely shouldn’t!

Our algorithms aren’t going to be correct 100% of the time: it’s simply not possible. However, we are constantly reviewing our data to ensure the highest possible accuracy. There are a few things to consider if you’re not feeling an analysis:

- Are you looking at a full report or just a preliminary report? Our full analysis has considerably more data so it might be worth adding your email and getting it queued up for a full analysis.

- Just because the ratings are “natural” or “unnatural” doesn’t mean that the product is good or bad. We’re only able to look for suspicious patterns in the data, not make an assessment as to whether or not you’ll like the product.

- If a textbook “snake-oil” product passes, consider this: it’s impossible to really weed out the placebo effect. Some honest reviewers might buy into the hype, drink the Kool-Aid, hop on the bandwagon or whatever you want to call it. They honestly have bought the product, used it and honestly think it works. Since their review is “honest” it won’t get detected by our system. We still haven’t figured out a way to automatically detect stupidity.

- Some items are highly subjective (media, books, movies, etc). Just because you thought a product was terrible doesn’t necessarily mean that everyone else shares your opinion or that any reviews to the contrary are automatically fake.

After taking those points into consideration, if you still don’t agree with a report, please contact us and let us know the URL to the report on our site, along with why you believe the report to be inaccurate. Keep in mind that we will never make individual changes on reports but will take your comments into consideration when tweaking our algorithms.

How does the site make money?

We have banner ads on our site to help recover some of the high costs of running the servers.

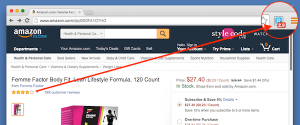

Your service is awesome! How can I browse products without having to manually look up each report?

Glad you asked! Check out our extensions that plug right into your browser and quickly show you our adjusted score without having to search our site. Want more info? Just click the extension button and it will open the full report on our site in a new window!

Best of all, it’s free!

Perhaps I am overlooking the obvious, but I cannot see where to contribute a few farthings to the ReviewMeta cause. I try to make small donations to those extensions that enhance my viability as a consumer or to the developer whose brainchild enriched my life in some way.

However, when I click on “Donate,” it brings me to the AIDS bicycle ride – which is fine – but I want to contribute directly to ReviewMeta at this time.

What am I missing? (Be specific: I have an adversarial relationship with technology, so I would appreciate an A-B-C directive. Note: am not on social media, either.)

And I guess I’d be a sucky journalist, too, as I have buried my lede:

“REVIEW META ROCKS!!!”

Such a great extension! By assessing the viability of a product’s reviews, I have felt much more solid in my purchases since adding ReviewMeta to my repertoire 3 years ago. There is so much competition on Amazon that I have become especially mindful of where my consumer dollars go. I feel obliged to get it right for both of us.

In the end, I am a 70-year-old consumer with limited spare cash in my jeans who can’t get out anymore (even pre-COVID-19), and who is psychologically unable to return poorly chosen material goods.

(Logic doesn’t apply here.)

But I know my audience and I am sure of my own taste. So missteps have always been limited. Now they are obsolete.

Unless I somehow goof up the sizing somewhere, virtually all my Amazon purchases have been a slam-dunk since I discovered ReviewMeta.

(And, yes, I do have an adblocker, but I was already passing it along to my circle anyway.)

Soooooooo……..back to that donation: where to send?????? XO ♎

Thanks for the kind words! Your money is no good here, but if you insist on supporting something, please support one of the causes listed on our donation page: http://reviewmeta.com/donate

Where can I find ‘been verified’ or its equivalent, on your product? Where can I find the results of clinical trials for your product?

We don’t sell anything.

Is ReviewMeta.com logging all of the websites I visit with my browser? Are you compiling or selling data (including anonymous)? Thank you.

We are only logging the ASIN of some of the products you visit on Amazon – and even then, there is nothing connecting you to that ASIN – we are simply getting an idea of which products on Amazon are the most popular. And we’re not selling this or sharing it with anyone.

I really love this website and the accompanying browser extension. You are doing a huge public service and I can’t thank you enough. Amazon does an extremely poor job of sniffing out fake reviews or they simply don’t care. While furnishing my house I have noticed a ton of products listed near the top that failed your test badly and I would be throwing my money at these dishonest companies for their shoddy products without your service.

Having said that, are there any plans to introduce more of the major online shopping sites? I know Amazon is not the only site experiencing a flood of fake reviews, but without any way to look at the data it’s hard to trust the product ratings on most sites. This actually becomes a major incentive to do my online shopping through Amazon because I can use your research to steer me in the right direction. I’m not a huge fan of this because Amazon isn’t doing anything to make the problem better, they are just large enough that a third party became interested in providing a service to serve their audience.

Anyways, thanks for everything you have done so far! I will make a contribution to the ACLU on your behalf as you requested.

Thanks for the kind words and donation to the ACLU! Initially, I wanted ReviewMeta to analyze reviews on all platforms, but the more I looked at the data, the bigger a challenge I realized it would be. For example, if you look at Walmart, you can’t click on a reviewer’s profile and see their previous reviews. There’s really no way to link all the reviews by one reviewer together. So there’s even more opaqueness at Walmart (for example) that makes it much harder to perform our analysis.

Hello GRBaset-

There are a few major challenges when collecting user information. The first is that keeping it all refreshed all the time exponentially increases our server load. To save on enormous server costs and streamline the time it takes to generate a report, we only update reviewer information intermittently. As a result, you’ll sometimes see reviewer information that is out of date.

The second major challenge is that Amazon has recently changed the way that they display user data. If you visit a profile page, you’ll notice that all the information about the reviewer is loaded through an AJAX request after the page loads, making it much more challenging than it seems to gather this information directly off Amazon.

Hi Nick-

There is no test that fails products “because the reviews are 5-star”.

If you have issue with a particular report, please consider posting an official brand response: https://reviewmeta.com/blog/official-brand-response/

How do you track users on Amazon? Do they have unique public names?

They actually have unique reviewer IDs that you can find in the URL of their profile page.

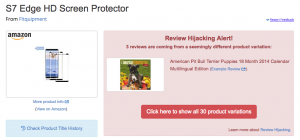

I get tired of looking at reviews on Amazon that are actually not for the product that that review is connected with. Does your analysis take these reviews into consideration?

Yeah, we have a big Hijacking Warning when detected: https://reviewmeta.com/blog/amazon-review-hijacking/

Thanks a ton for ReviewMeta! I almost always use it when shopping, and find it incredibly useful. I particularly like that you show the reasoning behind the analyses (this is why I don’t use FakeSpot, and never will).

One potential further improvement: browsing would be even faster if we could piggyback off of Amazon’s powerful search and filtering tools that would surely not be worth attempting to develop and maintain here. Amazon has an absurd amount of resources to pour into things like letting us look at, e.g., only dishwasher-safe cups made of plastic, that have stems, under $40, between 10 and 20 ounces. I and I think tons of others would massively appreciate an extension to directly show ReviewMeta scores on Amazon pages, next to brands and next to items. I’d guess that charging for that extension while keeping the current interface free would be very successful.

This definitely would be an improvement – we’re working on some extra features for our extension and hopefully should have an update soon!

Hi, I am a PhD student researching links between online reviews and actual product quality. I wanted to ask if it is permitted (and under which conditions) to use information from your website for a selected amount of specific products for my (non-commercial) research. Thank you!

Sure, please send us an email: [email protected]

Now that y’all have proper product & user rating, the obvious thing to capitalize on is to flag when a user *changes* their rating/review. (I see a section for deleted reviews, which is kinda the beginning to this w/ verbiage: “the fact that action was taken to delete reviews raises some red flags about the integrity of the reviews for the product as whole.”) Similar description to say changing a review would be more significant, as it’s even more action/effort.

Before launching RM, I was thinking it would be cool to track review edits. I decided to put it on the back burner to focus on what was needed for launch, and never really picked the idea back up. It would be really interesting data to see, especially to get an aggregate picture of whether reviews are changing positively or negatively over time.

That said, the challenges/fears would be: 1 – afraid that there would be practically no products where there’d be any significant data, and 2 – it would require the system to compare reviews and possibly save a copy before overwriting them – requiring a lot more processing power. I’m sure it can be done, but I’d fear that it could cause system slowdowns, more bugs, downtime, etc.

So it’s on the list of “someday ideas” but I can’t say that it will be anytime soon. Always great to hear your thoughts.

If someone hides a review from their profile, it doesn’t get marked as deleted in our system. It’s just not visible on their public profile page, but it’s still visible on the product review page. It’s still public, just not listed on their profile page.

It’s a lot of work to distinguish which reviews have been hidden and which haven’t and keep that constantly updated, so we just stay conservative and do not display the list of actual reviews from a reviewer.

How is the avg. review trust determined? I have a score of 64%, even though all my reviews were honest.

Average reviewer trust is the average of the trust scores calculated for each of the reviewer’s reviews. Keep in mind that our algorithm is just an estimate, so we are not saying that your reviews are fake as a matter of fact: https://reviewmeta.com/blog/shortcomings-of-reviewmeta-com/
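As a minimal sketch of that averaging step, assuming each review has already been given a trust percentage by some earlier analysis (the function name and the sample numbers are made up for illustration):

```python
def average_reviewer_trust(review_trusts):
    """Mean of the per-review trust percentages for one reviewer."""
    if not review_trusts:
        return None  # no scored reviews, so no average to report
    return sum(review_trusts) / len(review_trusts)


# Hypothetical reviewer with three scored reviews.
print(average_reviewer_trust([100, 64, 28]))
```

A single low-trust review can pull the average down noticeably even when the others score well, which is one reason the profile number alone does not say which review was devalued.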

Thanks for the interesting read!

I just wondered about this, because on one of my more detailed reviews where I tried to talk about all the relevant aspects (which 7 people found helpful by the way) someone was like: “what does that have to do with a review” and literally called me an idiot.

Of course, this was nagging me. 😉 So I checked his reviews and a lot of them were really unsubstantial, like: “fast shipping and really cool” and “I was very pleased”. On one of them, there were comments from people who bashed him for a really bad review. Anyways…

I found his score here was 92% even though he has fewer verified purchases (him 88%, me 91%) and a deleted review (I have none deleted). Of course, now I understand that the data on the profiles here does not represent what goes on in the background. I presume each review is scored separately (good/bad reviews) and the average is shown on the profile.

I am not able to see which of my reviews got a bad score, because not all the rated reviews are shown on ReviewMeta and sometimes no best or worst reviews are displayed at all. I really hate those reviewers who do not review the product, but rather their bad experience with a certain seller on the Amazon Marketplace. Therefore I really try to create helpful reviews whenever I can.

This leads me to propose a feature request: I think it could be useful to be able to see all the scanned reviews of a single user on their ReviewMeta profile, shown with their trust scores the same way they are on product pages. This way, it is easier to see and understand if a reviewer might be just a fanboy of some brand. Or maybe they always write in the same fashion and do not really put much effort into their reviews. Also, we could more easily identify if a user only reviews certain brands. It basically gives us a better picture of the reviewer who posted a mistrusted review on a product we are interested in.

I definitely see how this could be helpful, and would love to implement this feature, but I’m worried about privacy. Amazon allows reviewers to “hide” reviews from their profile page – this is definitely being abused, but it makes sense that reviewers can do this. If they are reviewing adult or sensitive products, they should totally be able to “hide” them from their profile pages. I would fear that we could easily violate the privacy of reviewers by showing all the reviews we’ve picked up for that reviewer.

Hmm. I guess you are right. So, when you hide a review on your Amazon profile, it is still available on the corresponding product’s page? Interesting!

When you hide a review, it will not appear on your profile page, but it will still appear on the product page. So in theory, someone could still find it, but it would be much, much harder to do.

Just heard about you guys on Planet Money. Awesome service and much appreciated! Question though: when trying to refresh reviews for products that haven’t been crawled in a while, what does, “Hmmm, the data wasn’t right.” mean and is there anything I can do to… ya know… make it right(?) so that I can see a more up-to-date analysis of the product?

The “Data isn’t right” message appears when we notice a change in the listing on Amazon. We reset a couple of variables and ask you to refresh the page. If you’re getting the error multiple times in a row on the same product, please let us know the URL and we’ll check into it.

When I try to post a low-star review at Canadian Tire, it always gets rejected, even though other writers manage to post. They seem to have banned all vivid language, not just profanity. I’ve never managed a satisfactory revision, either, so it is too frustrating to bother with.

Ah, I see, need to update the wording there. The profile analysis feature came years after the product analysis, but I never changed the wording to reflect that. I might just keep it hidden as a secret “power user” feature. I’ve got a post coming soon about some of the other easter eggs hidden around the site.

There’re others⁉️ Can’t wait! Maybe something like https://support.google.com/websearch/answer/35890

Is there a way to check out a particular reviewer? For instance, how do I see how my reviews are rated on your site?

Yes, you can just copy the URL of your public Amazon profile into the ReviewMeta search box and it will bring you to a page that looks like this: https://reviewmeta.com/profile/amazon/alt/AF3RAEQWSGFAWVTKDEFAOGTVL56A

Interesting! I had literally just read that article before I saw this comment. I think this deserves some further analysis.

Fortunately, I stumbled upon your site & have bookmarked it for future use. I noticed in your FAQ section you stated ‘we do not like to use “fake”’, but didn’t list substitute words, i.e., fabricated, forged, fictitious, phony, fraudulent, & concocted are a few that came to mind. So I thought I’d ask why & what words did you prefer? I’m not trying to be critical, just curious, because I think it’s a great site, simple, streamlined, & to the point. Graphics are great too; 5 stars to you!

Thanks for the comment!

Here’s more info about why we don’t use the word “fake”: https://reviewmeta.com/blog/unnatural-reviews-and-why-we-dont-use-the-word-fake/

From the article: Ultimately we’re thinking in terms of “Natural Vs. Unnatural” rather than “Fake Vs. Real”.

So to answer your question, ReviewMeta.com is really looking for reviews that are Natural vs. Unnatural.

Another RFE: Automatically run @ least preliminary reports for the 4 or 5 Deals of the day (grouping makes that ~15 products) when they are released every morning. For Amazon US, that’s the top section of https://smile.amazon.com/gp/goldbox/

Wow, some of these deals are failing really hard. How do companies get their product to the top of this section? Is it curated or are they just paying Amazon for the promotion?

I’m sure they’re paid seller/manufacturer promos, but these items are sent to every Amazon customer (on the main Deals maillist = most registered folks) daily. I myself have received it for years, so it’s the lowest-hanging fruit of all — for 24 hours.

RFE: I think it’d be instructive to be able to see the average rating of reviews which share a particular Repeated Phrase.

E.g., I’m checking reviews of a cellphone. If Repeated Phrase “screen clarity” comes up, a star rating average for those reviews of 4.5 vs. 1.5 may say something significant, right?

Absolutely. I think this would be extremely helpful to see and shouldn’t be too hard of a feature to add. I’ll add it to the dev list.

Thanks for taking the suggestion. It’s rather refreshing!

Just an update – we’re pushing this feature with the next code deploy (later today). You will only see it on reports that have been updated after the push since we weren’t calculating the data previously. Thanks again for the suggestion!

I really like the implementation — unobtrusive, but clear.

When are you planning on supporting Amazon.es? This is the only site of its kind available now, so it would be interesting if you added it as well.

Hopefully soon. The next ones to be added should be:

Amazon.es

Amazon.jp

Amazon.in

Amazon.cn

Amazon.com.mx

Amazon.br

Thank you for the reply.

Hello,

Is there a way to analyse a specific reviewer account for authenticity? I don’t know if that service is available on this site – do you have any recommendations?

Hi Eric-

It’s something that is technically possible but not something that’s offered right now. Currently, we assign the trust to individual reviews, not individual reviewers, but it’s definitely something I’m looking at.

Hey Eric-

I went ahead and launched a beta version of this feature. If you click on a username on any of the example reviews from a report, you can see our summary page about that user: https://reviewmeta.com/profile/amazon/A38MFGPOV52D1J

After testing this for a while I plan to add functionality into the extension + search bar to make it really easy to get the stats on a reviewer.

However, for now, there’s a hack to analyze any reviewer. On a profile page (eg. https://www.amazon.com/gp/profile/amzn1.account.AG4E63CHIS6QCMZUWH4J4FP6SYYA/ref=cm_cr_dp_d_gw_tr?ie=UTF8) grab the ID (everything after the “.account.” and before the slash, so “AG4E63CHIS6QCMZUWH4J4FP6SYYA”). Then type in “http://reviewmeta.com/profile/amazon/alt/” + the ID that you just grabbed, so the link will look like this:

http://reviewmeta.com/profile/amazon/alt/AG4E63CHIS6QCMZUWH4J4FP6SYYA

Done!
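For anyone who would rather script the manual steps above, here is a small Python sketch. It assumes the profile URL contains “amzn1.account.” followed by the ID and then a slash, exactly as in the example; the function name is made up, and other Amazon URL shapes are not handled.

```python
import re

# Capture everything after ".account." up to the next slash
# (or "?" / "#", in case the URL has no trailing path).
ACCOUNT_ID = re.compile(r"amzn1\.account\.([^/?#]+)")


def reviewmeta_profile_url(amazon_profile_url):
    """Build the ReviewMeta profile link from an Amazon profile URL."""
    match = ACCOUNT_ID.search(amazon_profile_url)
    if match is None:
        return None  # URL does not follow the expected pattern
    return "http://reviewmeta.com/profile/amazon/alt/" + match.group(1)


example = ("https://www.amazon.com/gp/profile/"
           "amzn1.account.AG4E63CHIS6QCMZUWH4J4FP6SYYA/"
           "ref=cm_cr_dp_d_gw_tr?ie=UTF8")
print(reviewmeta_profile_url(example))
```

Returning `None` for unrecognized URLs keeps the sketch from guessing at an ID when the link has a different format.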

I tried it on my profile, & got a 404. Probably it’d be easier if we could just copy/paste the profile URL directly into the ReviewMeta searchbox. If one inserts the single review URL, maybe rate both the item & the reviewer.

This is the plan. For now, the feature is in beta testing and will be tweaked and patched before it’s pushed to the mainstream user.

I’ve been reviewing individual sellers on Amazon ever since I started buying there. Could the framework from reviewer ratings be leveraged into rating sellers? (I’m aware it’s completely different data.) Also, doing such for Amazon itself (but possibly not its Warehouse Deals “sub-seller”) might overwhelm your systems, so I suppose leave them out (I think that’s default for Amazon on itself), but that might be most interesting of all!

Ah, so you’re talking about analyzing the actual reviews on sellers themselves? I thought about that briefly at the onset of the project but haven’t really considered it again until now. I’ll add it to the list of things to ponder – though no promises that I’ll get there.

I think, if you’re able to accomplish it, it’d be the complete experience.

E.g., I do my research on the 5g HD Organic Levitating Inline VR Gizmo (of course, also using RM) & now am @ the point of buying. But whom do I buy from? Should I buy from Amazon itself, with its various advantages/disadvantages, vs an offer by ACME Widgets (of Roadrunner fame, naturally) that’s 25% less. The seller ratings seem ok (other than some multiple almost-0 ratings from Wile E. Coyote, who might just be hangry — how do I tell⁉️), but I’m unsure. If only someone somewhere could tell me!

I think you have a good point – how do we know that ACME Widgets isn’t selling knock-offs? I think analyzing seller reviews could help us with that.

Or they’ve terrible customer service if there’s an issue, or don’t ship timely/properly, or used to be excellent & suddenly, recently, have gone downhill.

We’re pushing an update that will allow you to search users in the search box. It’s still a beta feature so don’t be alarmed if we can’t find the profile based on the copy & pasted link. Should be live in the next few hours.

WFM quite well. Thanks!

I remember reading an article that spoke about quantifying the intuition that an item with 3 reviews averaging 5* is likely worse than one that had 1000s of reviews averaging 4.8. I couldn’t find the article, nor do I remember the metric they used (I think it was a lower bound of some interval); but as statisticians I’m sure you guys know of similar measures. Is the reason you don’t include it just because of potential user confusion?

I was using the reviewmeta category browsing and I had to slog through 10s of items with single digit reviews for each item with a “real” (by that measure) high rating. Being able to sort by “real” rating would be super helpful there, and when looking at a particular item (although I would probably have to look at enough items to re-calibrate what a given score meant).

Hello AB-

The categories are already sorted by an algorithm based on the adjusted rating that takes into account the number of reviews – here’s an example category: https://reviewmeta.com/category/amazon/6939045011 – notice how products with a 4.9 adjusted rating are displayed above products with a 5.0 adjusted rating?

The challenge here is how many reviews are necessary to justify placing a product with a lower adjusted rating above a product with a higher adjusted rating? Obviously a 4.8 with 1,000’s of reviews should be above a 5.0 with 3 reviews, but what about a 4.9 with 100 reviews? Or a 5.0 with 25 reviews?

After taking a second look at the data, it does seem like we could tweak the ranking algorithm a bit further to place even more value on products that have more reviews.
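To make the trade-off concrete, here is one common way to blend a rating with its review count: a Bayesian average, which pulls products with few reviews toward a prior. This is not ReviewMeta's ranking algorithm, just a Python sketch of a standard technique; `prior_mean` and `prior_weight` are hypothetical tuning values.

```python
def bayesian_average(rating, n_reviews, prior_mean=4.0, prior_weight=20):
    """Shrink a product's rating toward prior_mean.

    prior_weight acts like that many imaginary reviews at prior_mean,
    so products with few real reviews stay close to the prior.
    """
    total = prior_weight * prior_mean + n_reviews * rating
    return total / (prior_weight + n_reviews)


# The four cases raised above: (label, adjusted rating, number of reviews).
products = [
    ("5.0 with 3 reviews", 5.0, 3),
    ("4.8 with 1000 reviews", 4.8, 1000),
    ("4.9 with 100 reviews", 4.9, 100),
    ("5.0 with 25 reviews", 5.0, 25),
]

ranked = sorted(products,
                key=lambda p: bayesian_average(p[1], p[2]),
                reverse=True)
for label, rating, n in ranked:
    print(label, round(bayesian_average(rating, n), 2))
```

With these particular values the 4.8 product with 1,000 reviews ranks first and the 5.0 product with 3 reviews ranks last. A smaller `prior_weight` trusts small samples more, so the question of "how many reviews are enough" turns into the choice of that one number.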

Hello Ian-

As we clearly state at the top of the page to all new visitors, ReviewMeta.com is an estimate – we don’t claim that our results are 100% perfect. There is no way to “prove” a review is fake, so there’s no real “answer key” to check our data against.

However, there are some data points that might suggest that our estimates carry some merit. I’m planning to publish a blog post with all the data soon, but in general here’s what we’re looking at:

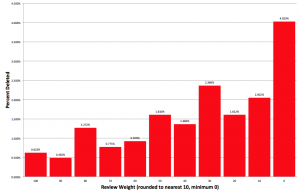

For every review that we collect, we assign a weight. You’ll see this weight on our site under the “Example Reviews” section for any report, listed anywhere between “100% Trust” and “0% Trust”. We can also figure out which reviews get removed from Amazon and call these “Deleted Reviews”. We have millions of deleted reviews in our dataset to look at. We see that the original “weight” we assigned to reviews that end up deleted tends to be much, much lower than the weight of other reviews.

So in essence, the reviews which ReviewMeta deems less trustworthy have a much, much higher chance of ending up deleted from Amazon. Since Amazon doesn’t use ReviewMeta to figure out which reviews to delete, it’s a signal that our estimate has some validity.

Again, I’ll be following up with a more detailed blog post that shows much more data so you can see for yourself.
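The comparison described above boils down to grouping reviews by whether they were later deleted and comparing the average weight of each group. A minimal Python sketch, using invented numbers purely to show the shape of the calculation (they are not real ReviewMeta data and prove nothing on their own):

```python
from statistics import mean

# Hypothetical (trust_weight, was_later_deleted) pairs; weights run 0.0 to 1.0.
reviews = [
    (0.10, True), (0.25, True), (0.15, True),
    (0.90, False), (0.80, False), (0.95, False),
]


def mean_trust(reviews, deleted):
    """Average trust weight of reviews whose deleted flag matches."""
    return mean(weight for weight, was_deleted in reviews
                if was_deleted == deleted)


print("deleted:", mean_trust(reviews, True))
print("still live:", mean_trust(reviews, False))
```

A large gap between the two averages on real data would be the kind of signal the reply refers to.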

Why has my review of “the myth of an afterlife” been rated a 0% trust rating? I spent a long time on that review, and it is vastly better than any other review of this book, including all the professional reviews.

Given that my review has a 0% trust rating, I question whether your estimates have much merit.

My advice to people is to not bother with the 5 stars ratings, and be cautious with 1 star ratings.

Hi Ian-

We look at a lot of different factors, so without actually knowing which review is yours, it’s tough for me to say. Keep in mind that there are literally billions of data points in the system: the algorithm is an estimate and we’re not claiming that it’s 100% perfect. There are going to be false positives and false negatives.

Here ya go:

https://www.amazon.com/gp/customer-reviews/RUOLM1707T2HJ/ref=cm_cr_arp_d_rvw_ttl?ie=UTF8&ASIN=B00UV3VFW8

Why on earth has it got a 0% trust rating?

I buy a lot of things from Amazon. But I don’t trust the reviews. So I use this site and fakespot. But if both of your sites are saying my reviews are fake, when they are most emphatically not and indeed I generally put a great deal of effort into my reviews, then this makes me question the usefulness of this site and fakespot.

For books I’m just wondering if reading the reviews on goodreads would be better? Also is there any way of getting a figure for the average rating a particular customer gives on Amazon? I think I would like to avoid taking note of customers whose rating average exceeds 4.

Hi Ian-

Looking at the review, I see several flags – you are not a verified purchaser, none of your reviews are verified purchases and there are a lot of word-for-word phrases that you use in your review which happen to be in other reviews for the same product.

Furthermore, the reviewers who used these phrases (33% of them) rated the product an average of 2.0 stars while reviewers who did not use these exact repeated phrases rated it 4.5 stars.
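For readers wondering what a check like this could look like, here is a rough sketch: find word-for-word phrases shared by more than one review, then compare the average rating of reviews that contain them with the average of those that do not. This is an assumption-laden illustration, not ReviewMeta's Repeated Phrases test; the 3-word phrase length, the helper names and the sample reviews are all hypothetical, and it ignores word count, which the real test reportedly considers.

```python
import re
from collections import Counter
from statistics import mean


def phrases(text, n=3):
    """Return the set of n-word phrases in text (lowercased, punctuation dropped)."""
    words = re.findall(r"[a-z']+", text.lower())
    return {" ".join(words[i:i + n]) for i in range(len(words) - n + 1)}


def split_by_repetition(reviews, n=3):
    """reviews: list of (text, stars).

    Returns (average stars of reviews containing a phrase that also appears
    in another review, average stars of the remaining reviews). A group with
    no reviews yields None.
    """
    per_review = [phrases(text, n) for text, _ in reviews]
    counts = Counter(p for ps in per_review for p in ps)
    repeated = {p for p, c in counts.items() if c > 1}
    with_rep = [stars for (_, stars), ps in zip(reviews, per_review) if ps & repeated]
    without = [stars for (_, stars), ps in zip(reviews, per_review) if not ps & repeated]
    return (mean(with_rep) if with_rep else None,
            mean(without) if without else None)


# Made-up sample reviews, for illustration only
sample = [
    ("This book ignores the evidence entirely", 2),
    ("The author ignores the evidence and repeats himself", 2),
    ("A thorough and balanced collection of essays", 5),
    ("Well argued, worth reading", 4),
]

result = split_by_repetition(sample)
print(result)
```

In this toy sample the first two reviews share the phrase "ignores the evidence", so they land in the repeated group. A large gap between the two averages is the kind of signal the reply above describes.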

Again, ReviewMeta.com is just an estimate, looking at literally billions of data points, and there is no possible way we could get it right every time. It’s always best to read the in-depth report that we have created and verify which reviews you want to trust.

They wouldn’t be verified purchases since Amazon US doesn’t normally deliver to my address, as I live in England. It’s also cheaper to order from Amazon UK since the p&p is less.

The word for word phrases are more likely to be repeated the longer one’s review is. Hence, the low rating reviews tend to be longer with 3 of them utilizing the full 5,000 word limit. I certainly never copied from anyone else, I think my own reviews are vastly better to be tempted to copy!

Also this repetition of word phrases implies one’s review is more likely to be downgraded for being longer!

Sorry, I’m not impressed. You should be more leery of 5 star reviews, but for the reviews of this book the higher ratings with the shorter reviews are getting higher trust rating. Ridiculous.

I’m sorry that you feel that way, but we simply don’t have the resources to go through and manually verify each reviewer’s physical location. Also, we actually do take into account the review length for the Repeated Phrases test, though it seems you didn’t take the time to read our description of that test either:

“We assign each review a score, taking into account factors like word count, number of repeated phrases found and the substantiality of those phrases.”

https://reviewmeta.com/blog/phrase-repetition/

I don’t understand this “repeated phrases” business. Obviously a combination of words might be used by other reviewers. How on earth would this detract from one’s review? I mean, unless you’re actually copying from someone else. But my review does not in the least remotely resemble anyone else’s!

Not sure what is meant by the “substantiality” of a “phrase”. You mean a particular string of words I use do not convey much meaning? An algorithm would not be able to ascertain this, nor would most people come to that!

Speaking just as a normal user, Ian, you went out of your way to attempt to make your review seem… different. I’d be cautious/TLDR w/ your review as a human.

Why didn’t you just post all this via your Amazon UK account on the page you bought this? That would’ve cleared most of RM’s red flags, since both your purchase & account would come up verified, & they’d be able to evaluate better.

Don’t understand this. Post all what? And where? What does TLDR w/ mean?

You have an up vote, so someone appears to understand what you mean. Speak English!

I haven’t gone out of my way to make my review different. I wrote a review that was appropriate. I take no note of other peoples’ reviews.

Googled it. If people can’t be pestered to read a 5,000 word review, then a fortiori I’d advise them not to buy this book since it is *vastly* more than 5,000 words. Moreover, the authors continually repeat themselves and the main contributor bangs on *interminably* about the same stuff all the time. My review is fresh, informative, and exciting.

‘Post all this via your Amazon UK account on the page you bought this?’ No idea where you mean I’m supposed to post my comments, and what the heck difference it would make to anything??

From what you said above ↑, you purchased item on Amazon UK, but reviewed on Amazon US, correct? If so, your review’d show up as Unverified Purchase &, presumably, Never Verified, which would automatically downgrade your review substantially.

Another thing, those giving non-serious reviews are scarcely likely to utilize the full 5,000 word limit!

Does anyone have any idea what “overrepresented participation” could possibly mean?

Hello Ian-

Have you read our blog post that explains this test? https://reviewmeta.com/blog/reviewer-participation/

I still have no idea.

It would be nice to have an extension for Mobile Safari.

I agree. We almost completed one but the strict design constraints made the approval process a nightmare and we essentially just gave up. Hopefully we’ll get back on it soon!

Can you please consider supporting Amazon.in also. Thanks!

Hey, which sites does ReviewMeta support? Only Amazon.com and Bodybuilding.com?

As stated on the homepage, we support Amazon.com, Amazon.ca, Amazon.co.uk, Amazon.de, Amazon.it, Amazon.fr, Amazon.com.au and Bodybuilding.com.

Just found the site yesterday and I think it’s great. You folks ever think about offering a subscription service or having a phone app to raise cash? Many ppl would think the service is worth it and gladly pay.

Hello, I’m not sure if I just missed the information, but does ReviewMeta work independently of the language? I’m especially interested in using it for German reviews, and I would expect that it analyses written words so it can’t be used the same way in German, right? Is there a detailed explanation of the 12 tests? Thank you very much

Hello Viola-

Yes, there are only a few tests that analyze the language, and those will be different for the different languages – so when looking at reviews on Amazon.de, we are expecting them to be written in German, and analyze them accordingly.

As far as the detailed explanation for each test, there will be a link at the bottom of each test on any given report that says “Read more about our _____ test here”.

I’ve only done like two amazon reviews. Is “easy grader” bad because it has a red icon?

“Bad” isn’t necessarily the most accurate word right here. It’s more that it’s something to take note of.

Why are the weight bars in different positions for different products?

If you think a certain combination of weights is the best, why don’t you always use it?

And I’d like to save my personal setting of the weight bars, so I could use as default for every analysis.

Is it possible?

The weighting is based on the severity of the issues discovered with each test. For example, just because a review is unverified doesn’t mean that it is inherently untrustworthy. However, if a large portion of the reviews are unverified, or the unverified reviews are giving the product a significantly different rating, then we’ll reduce the weight those types of reviews hold.
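As a rough sketch of how severity-based weighting could work for the unverified-purchase example: the weight stays at full strength when nothing looks unusual and shrinks as either signal grows. The function name, the thresholds `max_share` and `max_gap`, and the linear scaling are all assumptions for illustration, not ReviewMeta's actual formula.

```python
def unverified_weight(share_unverified, rating_gap, max_share=0.5, max_gap=1.0):
    """Return a weight in [0, 1] for unverified reviews; 1.0 means no reduction.

    share_unverified: fraction of the product's reviews that are unverified.
    rating_gap: average stars of unverified reviews minus verified reviews.
    max_share and max_gap are hypothetical points at which the weight hits 0.
    """
    share_severity = min(share_unverified / max_share, 1.0)
    gap_severity = min(abs(rating_gap) / max_gap, 1.0)
    # The more severe of the two signals drives the reduction
    severity = max(share_severity, gap_severity)
    return 1.0 - severity


print(unverified_weight(0.0, 0.0))   # nothing unusual: full weight
print(unverified_weight(0.25, 0.0))  # moderate share of unverified reviews
print(unverified_weight(0.1, 2.0))   # small share, but a very different rating
```

Because the severity differs from product to product, the resulting weights differ too, which is why the bars sit in different positions on different reports.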

I, too, would like to save my personalized weighting. How do I go about it?

What’s the business model of reviewmeta.com? How do you guys make money?

At the moment, we’re actually losing a little bit of money each month. We have a few ad units but they don’t bring in a whole lot of cash. We’re seeing growth each month so hopefully we’ll tip the scales, but for now, we’re just happy providing the service to the public for free.

Have y’all made it into the black?

I honestly don’t see a problem w/ y’all using Amazon Referral credits, either, just as long as y’all adhere to 2 principles:

• Consistently apply to ALL Amazon products, whatever their RM score

• Default it on, but, for purists, put a toggle/non-referred version next to each Amazon link to turn it off (I’m sure it could be JavaScript’d/CSS’d) with a mouseover explanation re: needing the unbiased revenue.

The problem is that our site does not comply with the Amazon Affiliate TOS so they won’t even allow us to use it.

What could you possibly run afoul of? I just found https://affiliate-program.amazon.com/help/operating/agreement & https://affiliate-program.amazon.com/help/operating/policies but, wow, TLDR.

Yeah, there’s a lot of stuff in there that we could be removed from the program for. It’s a long read for sure.

Specifically, in short? There’s section(s) on review rating⁉️ It must be like a government bill…

Is there any plan to support Best Buy in the future? Their “tech insider network” is basically a huge incentivized review system and the incentivized reviews are exploding on their site.

At the moment, there is not – you’re the first to mention Best Buy and we’ll have to take a closer look into it soon.

Any update re: Best Buy?

Any update re: Best Buy reviews?

At the moment we’ll be focusing our efforts on integrating other regional Amazon sites such as Mexico, India, Japan, etc. Best Buy is on the list but way on the back burner. I’ll keep you posted if things change.

Is it possible for me to download a program from you, leave my computer on overnight, and let you use its processing power? I really want to help you. Or do you have a donation account?

PS: Your verification mail was in my spam folder. Therefore it took me a while to find it.

That’s a very generous offer! I’ve seen that done on other projects before; however, I think that the extra development required just to utilize remote CPUs might not be worth the effort. It’s not that there’s a lack of computing power in the world; it’s more that we’re simply maxing out our two dedicated servers for this project.

We are considering setting up a donation account – gotta talk to the accountant about it.

Was it the disqus email verification in the spam folder?

I would happily donate to this website; it saves me a ton of money on bad products.

Thanks!!! https://reviewmeta.com/donate

Consider ko-fi.com, &c, for add’l donations. Many folks can’t/won’t use PayPal.

This is a good idea. Adding it to the list, even though I never feel good about requesting donations. It’s my personal project and I don’t necessarily want to feel like I “owe” anybody anything, even though that’s not how it works. I understand people just want to support the cause so I might as well make it easy for them to do that!

You should consider adding support for Google Play and the App Store.

That’s definitely on our radar!