How Accurate is ReviewMeta.com?

This is a question I see asked frequently. Unfortunately, I also see it frequently answered by people whose opinions are based on only a few anecdotal data points.

My answer to this question has always been “I’d like to think the algorithm is pretty accurate, but ultimately there’s no way to verify it.” This is because I believe it’s impossible to determine with 100% accuracy whether a review is fake or not. Sometimes people point out a review and say “this is fake and not getting detected by ReviewMeta”, but you have to wonder: how do they know it’s fake? It’s important to remember the difference between an opinion and a fact here.

The challenge is that there is no “Answer Key”.

We don’t exactly have a reliable answer key against which we can compare our data. Or at least that’s what I was always telling myself.

What I recently realized is that our deleted review data might give us some interesting insights. It’s not perfect data, but at least it gives us something to work with.

The first thing to do is disclose everything we know about the deleted review data (including the obvious shortcomings).

- We don’t have some secret access channel to view reviews that have been removed from Amazon. We can only mark a review as “deleted” if we collect the review and then, at a later date, see that the review is no longer there. So we are probably missing millions of deleted reviews from our dataset, and we also have thousands of reviews that aren’t yet marked as deleted in our system even though they have been removed from Amazon since the last time we checked.

- We call them “deleted reviews” but that doesn’t mean that Amazon has actively suppressed them. For example, the author may have removed them, or they might have been removed as a result of an entire account wipe-out.

- Even if Amazon has actively suppressed a review, that doesn’t mean it was fake. Maybe it had inappropriate language, contained personal information or was challenged by the seller.

- Amazon isn’t 100% accurate in their detection methods either. We’ve seen honest users complain about having their reviews deleted before. This goes back to my belief that it’s impossible to determine if a review is fake or not with 100% accuracy – even Amazon cannot do it themselves.

The deleted review data is the closest thing we have to compare.

So hopefully you’re still with me even after I just disclosed all the shortcomings of the data. I’m trying to make sure it’s crystal clear that the data isn’t perfect; however, it’s the best we’ve got, so let’s jump right into it.

We’re going to look only at reviews in our dataset that were posted to Amazon sometime in 2017. I chose this date range for a few reasons:

- I wanted to allow enough time to pass for us to pick up a sufficient number of deleted reviews. Since 2017 ended four and a half months ago, I figure that’s enough time to pick up some good data.

- I didn’t want to include the millions of deleted incentivized reviews in this analysis. After Amazon banned incentivized reviews on October 3, 2016, they deleted millions of pre-existing incentivized reviews. I didn’t want that skewing our results.

Non-Deleted Reviews: 21,078,110

Deleted Reviews: 255,956

Total Reviews in Dataset: 21,334,066
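As a quick sanity check on these numbers, the two groups should add up to the total, and the overall share of 2017 reviews that we later saw disappear works out to roughly 1.2%. A minimal Python sketch of that arithmetic, using only the three counts above:

```python
# Counts reported above for reviews posted to Amazon in 2017.
non_deleted = 21_078_110
deleted = 255_956
total = 21_334_066

# The two groups should account for every review in the dataset.
assert non_deleted + deleted == total

# Share of 2017 reviews that were later observed as missing from Amazon.
deletion_rate = deleted / total
print(f"Deletion rate: {deletion_rate:.2%}")  # Deletion rate: 1.20%
```

Because we can only mark a review as deleted after we have collected it and later noticed it missing, this rate is best read as a lower bound on how many of these reviews were actually removed.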

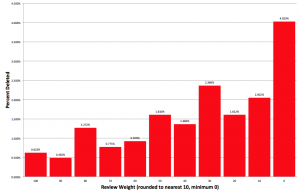

Comparing Deleted Reviews with Review Weight.

For every review that we analyze, we assign a weight. Every review starts with weight=100 and may lose points for failing different tests. Internally, reviews can fall well below a weight of 0; however, the lowest score we will display is 0.
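To make the scoring scheme concrete, here is a minimal sketch of a weight that starts at 100, loses points for each failed test, and is floored at 0 only when displayed. The test names and penalty values in `PENALTIES`, along with the `Review`, `internal_weight`, and `displayed_trust` names, are hypothetical placeholders for illustration, not ReviewMeta's actual tests or point values.

```python
from dataclasses import dataclass

# Hypothetical tests and penalties, for illustration only.
PENALTIES = {
    "unverified_purchase": 30,
    "one_hit_wonder": 40,
    "suspicious_word_count": 50,
}

STARTING_WEIGHT = 100
DISPLAY_FLOOR = 0


@dataclass
class Review:
    review_id: str
    failed_tests: list[str]


def internal_weight(review: Review) -> int:
    """Start at 100 and subtract a penalty for every failed test.

    The result is not clamped, so it can fall well below 0.
    """
    return STARTING_WEIGHT - sum(PENALTIES[test] for test in review.failed_tests)


def displayed_trust(review: Review) -> int:
    """The weight as shown on a report: never lower than 0."""
    return max(DISPLAY_FLOOR, internal_weight(review))


clean = Review("r1", [])
shady = Review("r2", ["unverified_purchase", "one_hit_wonder", "suspicious_word_count"])
print(internal_weight(clean), displayed_trust(clean))  # 100 100
print(internal_weight(shady), displayed_trust(shady))  # -20 0
```

Keeping the unclamped value internally means two reviews that both display as 0 can still be told apart by how badly they failed.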

On our reports, you can see this number listed as “TRUST”.

If we notice that a review is missing, we do not update the weight. We only update the weight of reviews that are visible on Amazon. So once we mark a review as “deleted”, we can still see what our weight calculation was for that review before it was deleted.
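Here is a rough sketch of that update rule: weights are recomputed only for reviews that are still visible, and a review that has gone missing is flagged as deleted while keeping the last weight we computed. The `StoredReview` record, the `refresh` function, and the idea of passing in a set of currently visible review IDs plus a scoring callback are all illustrative assumptions, not ReviewMeta's actual code.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class StoredReview:
    review_id: str
    weight: int
    deleted: bool = False


def refresh(
    stored: dict[str, StoredReview],
    visible_ids: set[str],
    score: Callable[[str], int],
) -> None:
    """Update weights for visible reviews; freeze the weight of missing ones."""
    for review_id, review in stored.items():
        if review_id in visible_ids:
            # Still on Amazon: recompute the weight.
            review.weight = score(review_id)
        else:
            # No longer visible: mark as deleted and keep the last computed weight.
            review.deleted = True


stored = {
    "a": StoredReview("a", weight=80),
    "b": StoredReview("b", weight=-15),
}
refresh(stored, visible_ids={"a"}, score=lambda _id: 75)
print(stored["a"])  # StoredReview(review_id='a', weight=75, deleted=False)
print(stored["b"])  # StoredReview(review_id='b', weight=-15, deleted=True)
```

Freezing the weight is what makes the comparison below possible: the score of a deleted review reflects what the algorithm thought of it while it was still live.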

Looking at the average weight for the Deleted and Non-Deleted reviews in the above-mentioned dataset, we see that the reviews that ultimately ended up deleted were rated as MUCH less trustworthy by our algorithm:

Average Weight of Non-Deleted Reviews: 62

Average Weight of Deleted Reviews: 4
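The comparison above is a simple group-by-and-average. A minimal sketch, using a handful of invented (weight, was_deleted) pairs purely for illustration; they are not drawn from our dataset and do not reproduce the 62 vs 4 figures. The post doesn't specify whether those averages use internal or floored weights, so the sample sticks to non-negative values.

```python
from statistics import mean

# Invented (weight, was_deleted) pairs, for illustration only.
reviews = [
    (100, False), (90, False), (70, False), (30, False), (50, False),
    (10, True), (0, True), (20, True),
]

avg_non_deleted = mean(w for w, was_deleted in reviews if not was_deleted)
avg_deleted = mean(w for w, was_deleted in reviews if was_deleted)

print(f"Non-deleted: {avg_non_deleted:.1f}")  # Non-deleted: 68.0
print(f"Deleted: {avg_deleted:.1f}")          # Deleted: 10.0
```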

Hurrah! This result is huge! There is a substantial correlation between our algorithm and Amazon’s own efforts. Seems we’re on to something here.

Let’s dive further into the details.

Below is a distribution of review weights (rounded to the nearest ten, with a minimum of 0). As you can see, reviews that receive a weight of 0 or below are 6x more likely to end up deleted from Amazon than reviews that have a weight of 95 or better.
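The bucketing and the "6x more likely" comparison can be sketched as follows: round each weight to the nearest ten (halves round up, so 95 lands in the 100 bucket), floor the bucket at 0, compute the deletion rate per bucket, and divide the 0-bucket rate by the 100-bucket rate. The function names are hypothetical, and the sample is invented only so the arithmetic is easy to follow; it is not our data.

```python
from collections import defaultdict


def bucket(weight: int) -> int:
    """Round to the nearest ten (halves round up) and floor the result at 0."""
    return max(0, (weight + 5) // 10 * 10)


def deletion_rate_by_bucket(reviews: list[tuple[int, bool]]) -> dict[int, float]:
    """Map each weight bucket to the share of its reviews that were deleted."""
    totals: dict[int, int] = defaultdict(int)
    deleted: dict[int, int] = defaultdict(int)
    for weight, was_deleted in reviews:
        b = bucket(weight)
        totals[b] += 1
        if was_deleted:
            deleted[b] += 1
    return {b: deleted[b] / totals[b] for b in sorted(totals)}


# Invented sample: 3 of 50 low-weight reviews deleted vs 1 of 100 high-weight ones.
sample = [(-40, True)] * 3 + [(2, False)] * 47 + [(97, True)] + [(100, False)] * 99
rates = deletion_rate_by_bucket(sample)
print(rates)                            # {0: 0.06, 100: 0.01}
print(f"{rates[0] / rates[100]:.1f}x")  # 6.0x
```

Integer floor division is used instead of Python's built-in `round()`, which rounds halves to even and would send 85 to 80 but 95 to 100.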

The data shows that we’re on to something, even if the algorithm still isn’t perfect. As already described in detail, the answer key we’re using (the deleted review data) is far from perfect, but at least this shows there’s a solid connection between our estimate and what ends up being removed from Amazon.

So the next time someone asks “Is ReviewMeta accurate?”, you can confidently answer “I think they’re on to something!”.

Please keep in mind that every single comment that is made must be manually approved. It actually says that immediately when you post a comment, but it doesn’t seem like you spend a lot of time reading before making comments anyway.

https://uploads.disquscdn.com/images/0fb2a6e7c7f9437fbec63acb1f7d502ba2a8a6bffdbddfd5f3d5c575d4ecdadf.png

Your statements are contradictory: “I have nothing to gain by making this statement” and “I just checked several of our products, a few even came up as fail”. Keep in mind that our tool is an estimate, and is very clearly labeled as such.

Thanks for this … good answer to the question.

This is great. Considering the weaknesses of the data you mentioned (the deleted reviews), the correlation between what Amazon has actually deleted and what ReviewMeta flags as untrustworthy is significant. Great work. I’m a fan.

I think you’re accurate with books that have more than 10–15 reviews. If a book has fewer than that, in my view, less weight should be given to the possibility of cheating (on the simple argument that there’s minimal reason to cheat for such meager returns).

My big question is how books that fail can suddenly pass without any change in the reviews. I witnessed that with more than one book, and I don’t think that looks good at all for RM. One example (which I won’t list here) had a pass, then a fail, and then a pass. (It’s a fail, trust me! Amateurish writing, cover, and title with 1000+ reviews and a weirdly enduring high rank.)

The more reviews, the better ReviewMeta works. If there are fewer than 10 reviews, it’s hard for the report to find “compelling” evidence of something unnatural going on.

As far as the reports changing, I believe it has to do with our preliminary vs full report. We’re cutting corners by sampling the data so that we can generate a preliminary report in under a minute. Our tolerances are a bit higher since we’re only sampling, so more products can slip by with a pass. Running the full report is always a good idea to make sure that our numbers are solid.
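One way to picture why a preliminary report can pass a product that the full report fails: the preliminary mode scores only a random sample and compensates for sampling noise with a looser cutoff. This is a minimal sketch under loudly stated assumptions: the pass/fail criterion (share of reviews with a weight of 0 or below), the 200-review sample size, and the 0.30/0.40 thresholds are all hypothetical, not ReviewMeta's real rules.

```python
import random
from typing import Optional


def suspicious_share(weights: list[int]) -> float:
    """Hypothetical criterion: share of reviews with a weight of 0 or below."""
    return sum(1 for w in weights if w <= 0) / len(weights)


def report(
    weights: list[int],
    preliminary: bool,
    sample_size: int = 200,
    full_threshold: float = 0.30,
    preliminary_threshold: float = 0.40,
    seed: Optional[int] = None,
) -> str:
    """Return "PASS" or "FAIL" for a product's review weights.

    The preliminary mode scores a random sample and, to allow for sampling
    noise, only fails the product at a looser (higher) threshold.
    """
    if preliminary and len(weights) > sample_size:
        data = random.Random(seed).sample(weights, sample_size)
        threshold = preliminary_threshold
    else:
        data = weights
        threshold = full_threshold
    return "FAIL" if suspicious_share(data) > threshold else "PASS"


# Invented product: 35% of its 1,000 reviews have a weight of 0 or below.
weights = [-10] * 350 + [80] * 650
print(report(weights, preliminary=False))          # FAIL (0.35 > 0.30)
print(report(weights, preliminary=True, seed=42))  # likely PASS: a 200-review sample lands near 0.35, under 0.40
```

Under these assumptions, a product sitting between the two cutoffs can flip between pass and fail depending on which report was run, while the full report, which scores every review against the stricter cutoff, gives the stable answer.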

Correlating to deleted reviews was inspired.