How it Works

Watch the video above for a quick overview of ReviewMeta.com.

Also, check out the Planet Money podcast (19:31) that features Tommy Noonan and the origin of ReviewMeta:

…so Tommy Noonan went back to work and, in essence, he coded a version of himself, an Internet review detective that can help any of us pull up an item on Amazon and cut through all the B.S. reviews to find just the good ones. He started a new website that he called reviewmeta.com.

– Planet Money Podcast: Episode 850: The Fake Review Hunter (6/27/2018)

Review platforms like Amazon and Bodybuilding.com have done an amazing job of gathering hundreds of millions of data points of customer feedback on the products they sell. These reviews are an absolute necessity in helping customers navigate the millions of products available to them. Unfortunately, many brands have chosen to abuse the platforms that were created to help customers, flooding them with low-quality and biased reviews to try to boost their own profits.

Everyone has their own method of quickly investigating a product’s reviews to check whether they are legit. You might read the most helpful review, then see what the negative reviewers are saying, scan a few more reviews, and ultimately go with your gut feeling.

At ReviewMeta we also investigate product reviews, but our methods don’t rely on gut feelings. We use algorithms and data science to scan thousands of reviews and identify unnatural patterns in just seconds.

Here’s a bit more about how our system works:

First, we gather review data.

We gather publicly available review data from platforms such as Amazon and Bodybuilding.com. We collect not only the set of reviews for a given product but also data on the reviewers behind those reviews. Even the most critical readers are unlikely to spend hours tallying up statistics on every reviewer for a product. At ReviewMeta, we automatically check all available data to give you a complete picture of what’s really going on.

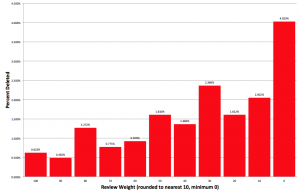

Next, we run the data through our analyzer.

Once we have all the data about a product, we perform an analysis and run twelve different tests. We’re looking at every single product in the same way – we don’t do any favors for anyone or make any individual judgement calls. We use statistical modeling on any suspicious patterns we detect in order to rule out the possibility they are due to random chance. You can read more about our statistical modeling here.

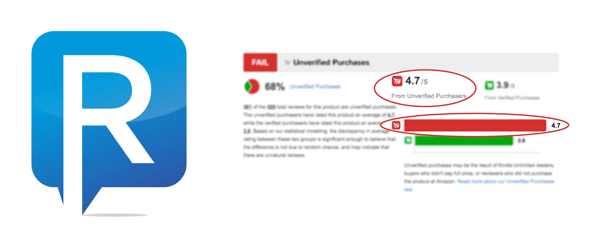
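As a rough illustration of what "ruling out random chance" can mean, here is a minimal sketch of a permutation test in Python. It is not ReviewMeta's actual model: the function name `permutation_p_value`, the split into unverified and verified reviewers, and the sample ratings are all assumptions made up for this example. The idea is to shuffle the ratings many times and count how often a rating gap at least as large as the observed one shows up purely by chance.

```python
import random


def mean(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / len(values)


def permutation_p_value(group_a, group_b, trials=10_000, seed=0):
    """Estimate how often a rating gap this large arises by chance.

    group_a, group_b: lists of star ratings (1-5) for two sets of
    reviews. The grouping itself is a hypothetical choice for this sketch.
    """
    rng = random.Random(seed)  # fixed seed so the result is repeatable
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    hits = 0
    for _ in range(trials):
        # Randomly reassign ratings to the two groups.
        rng.shuffle(pooled)
        gap = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if gap >= observed:
            hits += 1
    # Add-one smoothing so the estimate is never exactly zero.
    return (hits + 1) / (trials + 1)


# Made-up ratings, for illustration only.
unverified = [5] * 60 + [4] * 8
verified = [5] * 10 + [4] * 8 + [3] * 6 + [2] * 4 + [1] * 4

p = permutation_p_value(unverified, verified, trials=2_000)
print(f"estimated p-value: {p:.4f}")
```

A small p-value suggests the gap between the two groups is unlikely to be a coincidence. A permutation test is used here only because it needs no assumptions about how ratings are distributed; other statistical tests could serve the same purpose.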

Last, we display a report that any human can easily understand.

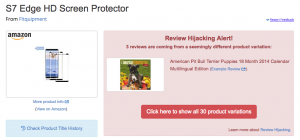

We present our findings in a transparent and easy-to-understand format that helps you see how we arrived at our conclusions. Here’s an overview of some of the features you’ll see:

Pass/Warn/Fail

This is the one-word answer to the question “Well, how do the reviews look?”

ReviewMeta Adjusted Rating

The ReviewMeta Adjusted Rating helps you cut through the noise of biased reviews and lets you know what the honest reviewers are actually saying. We re-calculate the average rating based only on the reviews we’ve determined to be more trustworthy. This gives you a quick and easy estimate to see how unnatural reviews might be affecting a product’s overall rating. Read more about the methodology of the ReviewMeta Adjusted Rating here.
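The recalculation described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Review` class, its `trusted` flag, and the sample data are hypothetical, and how a review earns that flag is outside the scope of this sketch.

```python
from dataclasses import dataclass


@dataclass
class Review:
    stars: int     # star rating, 1 to 5
    trusted: bool  # hypothetical flag: True if the review was judged more trustworthy


def average_stars(reviews):
    """Plain mean of star ratings, or None if there are no reviews."""
    if not reviews:
        return None
    return sum(r.stars for r in reviews) / len(reviews)


def adjusted_rating(reviews):
    """Return (average over trusted reviews only, number of trusted reviews)."""
    trusted = [r for r in reviews if r.trusted]
    return average_stars(trusted), len(trusted)


# Made-up data: six untrusted 5-star reviews and four trusted reviews.
reviews = [Review(5, False)] * 6 + [
    Review(5, True),
    Review(4, True),
    Review(3, True),
    Review(4, True),
]

original = average_stars(reviews)
adjusted, kept = adjusted_rating(reviews)
print(f"original: {original:.1f} from {len(reviews)} reviews")
print(f"adjusted: {adjusted:.1f} from {kept} reviews")
```

With this sample data the original average is 4.6 across 10 reviews, while the adjusted average is 4.0 across the 4 trusted reviews. Returning the count alongside the average makes it easy to see how many reviews the adjusted figure rests on.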

Most/Least Trusted Reviews

Chances are, you’re already trying to identify and read the most honest reviews. We’re saving you the time of digging around and showing you our top ten (or fewer) most trusted and least trusted reviews.

Analysis Details

This is the geeky part where we go into more depth about every single one of our twelve tests. It points out exactly which patterns we have discovered in the reviews, links you to examples, and shows you exactly why we think each one is suspicious (or not!).

What’s next?

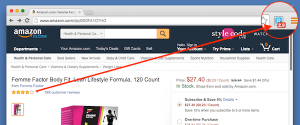

If you haven’t done so already, make sure to grab our browser plugin. This displays a product’s analysis results and adjusted rating directly in your browser as you shop! You won’t have to leave Amazon or Bodybuilding.com to check our analysis of the reviews.

If you don’t feel ready to commit to a browser plugin, you can also copy and paste a product URL into our search bar, and it will pull up the report for that product directly!

More questions?

There’s lots to learn about ReviewMeta and we’re excited to share it! Check out our FAQ here, or drop us a note directly.

Great idea and implementation! I haven’t been an Amazon customer for a while and started using it again – needed to get a few things after I moved.

Reviews have become a total mess: I see 4.5-5 star ratings, but once I look through them, 10% of the reviews are 1 star…

Is there a way to make it easy for users to report fake reviews? (I assume that would potentially improve the quality of Amazon reviews, or they would get tired of dealing with it and stop showing unverified reviews.)

Since we’re not affiliated with Amazon, we can’t change the way reviews are reported, but we did write up a guide to fighting back: https://reviewmeta.com/blog/how-to-fight-back-against-fake-reviews-on-amazon/

Thank you for your awesome work. I’m a French blogger specialized in the topic of fake reviews and I have already recommended your tool several times to my readers.

Do you plan to release the source code publicly? At least the grading algorithm, if you don’t want to release the Amazon scraper (in case you’re in an arms race against Amazon to scrape the reviews). It would give a lot of insight into how to manually spot fake reviews on websites not supported by ReviewMeta. Moreover, the community would be able to write custom scrapers and plug them into the official ReviewMeta grading algorithm. I think this would be an awesome move from your team!

Stay strong in these troubled times

Hello –

Thanks for the kind words! We don’t plan to release the source code to the site, but we try to be as transparent as possible by showing as much work as we can in each of our reports. Be sure to check this out to make sure you’re getting the most out of RM: https://reviewmeta.com/blog/reviewmetas-hidden-features-for-power-users/

How do you get the review data? Is there a public API from Amazon to use?

We don’t use the API as it won’t return review data. We simply visit the URLs just like any human would.

Looks awesome. In the case of variants, will it end up with a revised review count for each variant?

Yes, click on the “See all variations” link in the info box below the report.

Hi there! First: thanks for your nice work!

My question: You’re writing about twelve different analysis tests, but I only recognized eleven (Deleted Reviews, Unverified P., Suspicious R., Phrase Repetition, Overlapping R.H., Reviewer Participation, Rating Trend, Word Count C., Reviewer Ease, Brand Repeats, and Incentivized R.) – which one did I miss?

Thanks so much for your answer and sorry for my English. 😉

KingCookie

Hello-

We’ve removed the Skewed Helpfulness test since Amazon decided to limit the data being displayed about helpfulness votes: https://reviewmeta.com/blog/skewed-helpfulness/

Thanks so much!

I’m confused. I would usually view 4.7 out of 5.0 as positive. If a 4.7 out of 5.0 appears in a category which is labeled F, does that mean 4.7 out of 5.0 reviews are considered unreliable?

I’m not sure that I understand your question. We do not label categories as “F”.

Hi @AuntBalloon: Yes, 4.7/5 is a very positive rating. The question is whether those reviews were real. In the example above, ReviewMeta found that 68% of the reviews were from unverified purchasers, and that verified purchasers gave much lower ratings, averaging 3.9. The difference was big enough to “Fail” the original review score: it looks like the ballot box was stuffed. The corrected score was about 3.9.

So, I have a question about how the report works. The pass/fail rating only tells you that there is a significant number of fake ratings? If the adjusted rating based on the remaining reviews is high, say 4.7, it is probably still a decent product?

For starters, we don’t use the word “fake” to describe the reviews we’re looking for here: https://reviewmeta.com/blog/unnatural-reviews-and-why-we-dont-use-the-word-fake/

The adjusted rating gives you a picture of what the natural-appearing reviews are saying about the product: https://reviewmeta.com/blog/reviewmeta-adjusted-rating/

I appreciate the reply. I just question the significance of a pass/fail rating. As you said, the adjusted rating gives you a picture of the natural reviews. I assume the p/f score is based on a ratio of unnatural to natural reviews. So, if there were 100 reviews, but they end up with an avg of 5 based off of 20 reviews, one might be swayed by the smaller sample size.

That’s about how it works – it’s based on how much the adjusted rating and review count differ from the original. Trying to summarize the entire report in one single word can be a little tough, so it’s always good to check the full report to see what’s going on.