0% Unnatural Reviews? August 2019 Algo Updates Explained

A few weeks ago we pushed an algorithm update that had been in the works for some time. Essentially, we tweaked the way the algorithm calculates the adjusted rating, the adjusted review count and the overall PASS/FAIL/WARN result. Everything that happens before that calculation is almost exactly the same as it was.

Just as always, we’re first using the various tests to calculate a “Trust” percentage for every single review. This “Trust” (sometimes called a weight) is between 100% and 0%. Nothing has changed in this regard. However, the way we use these weights is now different.

Previous Approach: A Weighted Average

We previously used a weighted average to come up with the adjusted rating and adjusted review count. So, for example, if you had 100 reviews, all with a Trust of 95%, the adjusted review count would show 95 reviews and tell you that 5% of reviews were removed.
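To make the arithmetic concrete, here is a minimal sketch of the weighted approach. It assumes the adjusted review count is the sum of the Trust weights and the adjusted rating is the Trust-weighted mean of the star ratings. The `Review` class and `weighted_adjustment` function are hypothetical names for illustration, not ReviewMeta's actual code.

```python
from dataclasses import dataclass


@dataclass
class Review:
    stars: float  # star rating left by the reviewer
    trust: float  # Trust weight between 0.0 (0%) and 1.0 (100%)


def weighted_adjustment(reviews):
    """Old-style adjustment (assumed): every review counts in proportion to its Trust."""
    if not reviews:
        return None, 0.0, 0.0
    total_trust = sum(r.trust for r in reviews)
    if total_trust == 0:
        # Nothing is trusted at all, so there is no adjusted rating to report.
        return None, 0.0, 100.0
    adjusted_rating = sum(r.stars * r.trust for r in reviews) / total_trust
    adjusted_count = total_trust  # partial reviews add up to a fractional count
    percent_removed = 100.0 * (1 - total_trust / len(reviews))
    return adjusted_rating, adjusted_count, percent_removed


# The example from the text: 100 reviews, each with 95% Trust.
rating, count, removed = weighted_adjustment([Review(4.0, 0.95) for _ in range(100)])
print(round(count), round(removed, 1))  # 95 5.0
```

Under these assumptions, the report shows 5% of reviews removed even though no individual review looks suspicious.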

We’ve realized that this could be somewhat misleading. In the example above, every review is rather trustworthy. It’s not fair to say that we’ve removed 5% of reviews in this case, because all of them look pretty good. Showing that 0% of reviews were removed and saying that we didn’t find anything suspicious is a bit more fair.

New Approach: It’s Now Binary

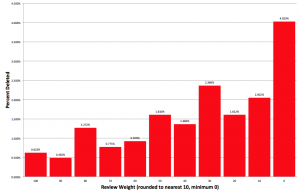

We’ve decided that instead of partially counting some reviews, it’s better to either count each review entirely or throw it out completely. So in the example above (every review has 95% Trust), you’d now see that 0% of reviews have been removed as unnatural and the adjusted review count is still 100 reviews.

The line is drawn at 20%. Any review with 20% Trust or higher will be fully included in the adjusted rating and review count, and any review with less than 20% Trust will be thrown out entirely.
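The cutoff rule can be sketched in a few lines. This assumes that kept reviews contribute equally to the adjusted rating (a plain average), which is one reading of "fully included". The names `Review`, `binary_adjustment` and `TRUST_THRESHOLD` are hypothetical and only illustrate the idea; this is not ReviewMeta's actual code.

```python
from dataclasses import dataclass

TRUST_THRESHOLD = 0.20  # the 20% line described above


@dataclass
class Review:
    stars: float  # star rating left by the reviewer
    trust: float  # Trust weight between 0.0 (0%) and 1.0 (100%)


def binary_adjustment(reviews, threshold=TRUST_THRESHOLD):
    """New-style adjustment (assumed): each review is fully kept or fully dropped."""
    if not reviews:
        return None, 0, 0.0
    # Trust at or above the threshold is kept; anything below is thrown out.
    kept = [r for r in reviews if r.trust >= threshold]
    adjusted_count = len(kept)
    adjusted_rating = sum(r.stars for r in kept) / adjusted_count if kept else None
    percent_removed = 100.0 * (len(reviews) - adjusted_count) / len(reviews)
    return adjusted_rating, adjusted_count, percent_removed


# The example from the text: 100 reviews, each with 95% Trust.
print(binary_adjustment([Review(4.0, 0.95) for _ in range(100)]))  # (4.0, 100, 0.0)
```

With the same 100 reviews at 95% Trust, nothing falls below the line, so the count stays at 100 and 0% of reviews are removed. A review at exactly 20% Trust is kept, while one at 19% is dropped.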

How is it possible to have 0% unnatural reviews for a product?

We’ve seen this question asked several times since pushing the new algorithm, and I think it’s being asked by people who are accustomed to every report showing at least a few percent of potentially unnatural reviews removed. However, it’s important to think about this from the perspective of someone who has never used ReviewMeta before. Doesn’t it seem likely that many listings on Amazon have nothing wrong with them? Surely sellers aren’t manipulating the reviews on every last listing, so the ReviewMeta reports should reflect that. If we don’t have sufficient reason to believe there is something suspicious going on with a listing, we shouldn’t be telling people that we’re throwing out reviews.

Remember that ReviewMeta is an estimate, and you can still pop the hood and turn the knobs yourself.

It’s important to always keep in mind that what we’re doing here isn’t magic. We don’t have a superpower that shows us the truth behind every review. What we’re doing (and what any fake review detector, including Amazon’s, is doing) is making a sophisticated estimate. We can’t guarantee that we’re always going to get every last report perfectly correct.

Furthermore, you should remember that ReviewMeta is a tool, and there are a lot of hidden features that help you get the most out of our site. See our “Hidden” features for Power Users.

Not sure if you have done it yet but could you do an annual, biggest review discrepancy? I’m interested in seeing how much the RM vs Amazon score can differ.

We often see adjusted review counts at 0, meaning we’re throwing out all the reviews, but these often get deleted from Amazon rather quickly. To see a list of all the latest reports, check out https://reviewmeta.com/best-worst

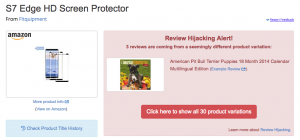

Thank you for the reply! This is perfect, also wanted to know if you have been noticing the trend where a product seems to have multiple reviews for multiple products and not the current product. For example, Bose ear pad replacements, cables and cases all under one product.

Yup, this is called Review Hijacking and we’ve been monitoring it for over a year now: https://reviewmeta.com/blog/amazon-review-hijacking/

Also check out the #StopReviewHijacking hashtag on Twitter.