ReviewMeta Analysis Test: Brand Repeats

At ReviewMeta we like to look for signs of unnatural reviews in ways that would take the average reader too long to discover on their own. Our Brand Repeats test analyzes the relationship a reviewer might have to the brand being reviewed. We do this by looking at each reviewer's review history and counting the number of times they have reviewed other products by this same brand.

We have developed three groups of Brand Repeats. The multi-tiered system allows us to see a very nuanced picture of the intensity of the reviewers’ relationship with the brand they are reviewing.

- Brand Repeaters have reviewed more than one product by this brand.

- Brand Loyalists have written multiple reviews, of which 50% or more are for this brand.

- Brand Monogamists have reviewed multiple products, all of them from this brand.

As you might have noticed, our groups of Brand Repeats work in an inclusive manner. All Brand Monogamists are also Brand Loyalists, and all Brand Loyalists are also Brand Repeaters. Brand Repeaters are therefore the broadest group.
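The three definitions above can be sketched in a few lines of Python. This is only an illustration, not our production code: the function name `classify_reviewer`, the `BrandRepeatGroups` type, and the idea of representing a review history as a plain list of brand names are all assumptions made for the example. It also assumes that the history includes the review being analyzed, and that a Loyalist must first qualify as a Repeater, which is what keeps the groups nested.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class BrandRepeatGroups:
    """Which Brand Repeats groups a single reviewer falls into (hypothetical type)."""
    repeater: bool
    loyalist: bool
    monogamist: bool


def classify_reviewer(review_brands: Sequence[str], brand: str) -> BrandRepeatGroups:
    """Classify one reviewer against one brand.

    review_brands holds the brand of every review in the reviewer's history,
    including the review of the product being analyzed (an assumption).
    """
    total = len(review_brands)
    same_brand = sum(1 for b in review_brands if b == brand)

    # Repeater: more than one reviewed product from this brand.
    repeater = same_brand > 1
    # Loyalist: a Repeater with at least 50% of reviews for this brand.
    loyalist = repeater and same_brand / total >= 0.5
    # Monogamist: a Repeater whose reviews are all for this brand.
    monogamist = repeater and same_brand == total

    return BrandRepeatGroups(repeater, loyalist, monogamist)
```

For example, a reviewer whose history is `["Acme", "Acme", "Other"]` would count as a Repeater and a Loyalist for "Acme", but not as a Monogamist.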

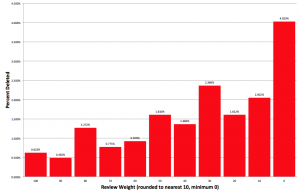

An example distribution

A high number of Brand Repeats on a product can be caused by several factors. Sometimes it is due to the nature of the brand: a brand that is highly prolific, or that makes products often used in conjunction with each other, can naturally produce higher numbers of Brand Repeats. However, high levels of Brand Repeats are more often caused by factors that introduce bias.

Brands with a loyal following may have fans who review every single one of their products. While this seems like a totally normal source of reviews, having a disproportionate number of reviews written by die-hard fans can skew the rating. Loyal fans of the brand may not be reviewing the product with a neutral mindset; their loyalty and past relationship with the brand may positively influence their reviews.

Some brands will even entice their customers to post reviews by offering discounts or other perks to reviewers. They will often build a list of customers who review them positively and call on this same list to write reviews on all their products. Since the brand controls this list, it’s easy for them to remove anyone who doesn’t post reviews to their liking, effectively cutting off that reviewer’s supply of new products if they are “too critical”. This creates an obvious bias: the brand is able to get a disproportionate number of reviews from die-hard fans who consistently write perfect reviews in order to preserve their lifeline of free stuff.

Lastly, and most maliciously, some brands will create sockpuppet accounts and write positive reviews for all of their products. These reviews are not just biased, they are fraudulent. We’ve seen accounts with 60+ reviews, all for one brand, all with perfect scores.

It’s almost impossible to determine the actual cause of Brand Repeats for a particular product. It could be a loyal following, a highly targeted reviewer list, fraudulent sockpuppet accounts, or a combination of these factors. Despite not being able to pinpoint the exact cause, we can still measure the impact of Brand Repeats on the overall rating.

To see whether Brand Repeats are benign or not, we do the following for each group (Brand Repeaters, Brand Loyalists and Brand Monogamists):

First, we check the overall percentage of the group. While it’s perfectly normal to see a small percentage of each group of Brand Repeats, an excessive amount can trigger a warning or failure. Next, we check to see if any group of Brand Repeats has a higher average rating than all other reviews. If it does, we’ll check to see if this discrepancy is statistically significant. This means that we run the data through an equation that takes into account the total number of reviews along with the variance of the individual ratings and tells us if the discrepancy is more than just the result of random chance. (You can read more about our statistical significance tests here.) If a group of Brand Repeats is giving the product a statistically significant higher rating, it strongly suggests that the reviewers in that group are not benign and are unfairly inflating the overall product rating.
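To make the check described above more concrete, here is a minimal sketch in Python. It is not the exact equation we use: it assumes a one-sided Welch-style comparison of the two average ratings, with a normal approximation for the p-value, chosen only because it uses the same ingredients mentioned above (the number of reviews and the variance of the ratings). The names `check_group` and `GroupCheck`, and the default `alpha` of 0.05, are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, variance
from typing import NamedTuple, Optional, Sequence


class GroupCheck(NamedTuple):
    """Result of checking one Brand Repeats group (hypothetical type)."""
    share: float        # fraction of all reviews written by the group
    rating_gap: float   # group average rating minus average of all other reviews
    p_value: float      # one-sided p-value for "group rates higher than others"
    significant: bool   # True if the higher rating is unlikely to be random chance


def check_group(group_ratings: Sequence[float],
                other_ratings: Sequence[float],
                alpha: float = 0.05) -> Optional[GroupCheck]:
    """Compare a group's ratings with all other reviews of the product.

    Returns None when either side has fewer than two reviews, because the
    sample variance is undefined in that case.
    """
    n_group, n_other = len(group_ratings), len(other_ratings)
    if n_group < 2 or n_other < 2:
        return None

    share = n_group / (n_group + n_other)
    gap = mean(group_ratings) - mean(other_ratings)

    # Standard error of the difference between the two means (Welch form).
    std_err = sqrt(variance(group_ratings) / n_group
                   + variance(other_ratings) / n_other)

    if std_err == 0:
        # Every rating within each side is identical; any gap is exact.
        p_value = 0.0 if gap > 0 else 1.0
    else:
        # Normal approximation (an assumption; a t-distribution is stricter
        # for small samples).
        p_value = 1.0 - NormalDist().cdf(gap / std_err)

    return GroupCheck(share, gap, p_value, p_value < alpha)
```

The `share` value corresponds to the first step (the overall percentage of the group), and `significant` to the second. The thresholds that turn a large share into a warning or failure are not shown here.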

Do brand repeats have a statistically significant effect in marketplaces that offer their own brands as well as third-party brands? Specifically, it seems unlikely that repeat reviewers for Amazon Basics products are particularly biased, just because that product line happens to be naturally pushed by Amazon.

We are seeing that a lot of the brand repeats do have a statistically significant impact on the reviews. It’s not always perfect, but that’s one of the reasons we have 12 tests. Here’s a shining example of brand repeats that really skew the rating: http://reviewmeta.com/bodybuilding/fitmiss/tone