ReviewMeta Analysis Test: Word Count Comparison

Review manipulation often leaves behind trails of evidence that may be nearly impossible for the average reader to detect, but are easy for our algorithms to pick up on. Here at ReviewMeta, our Word Count Comparison test examines the word count of every review and helps us identify unnatural patterns. While individual reviews come in all shapes and sizes regardless of bias, we have built this test specifically to analyze aggregate data, not just individual word counts. By doing this, certain patterns begin to emerge that help us identify groups of reviews that may have been created unnaturally.

If reviews were created completely randomly, in a perfect-world scenario, we’d expect to see a pretty predictable distribution of word counts: some long, some short and some in between. However, if a larger share of a product’s reviews falls at certain word counts than we’d normally see, this might indicate unnatural reviews.

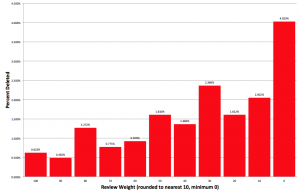

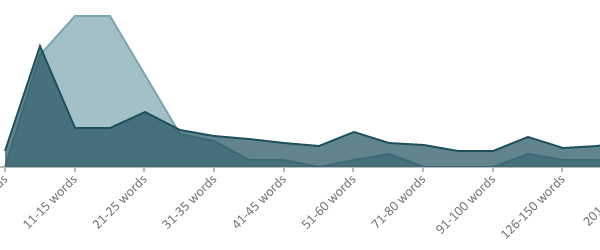

To build our word count distribution, we start by putting every single review for a product into a “word count group”. For example, a 23-word review would fall into the “21-25 word count group”, a 109-word review would fall into the “101-125 word count group”, and a 600-word review would fall into the “201+ word count group”. This gives us the product’s word count distribution. But a product’s word count distribution on its own doesn’t really tell us that much: we need something to compare it to. That is why we grab the word count distribution for all of the reviews in the product’s category to get the expected word count distribution.
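The grouping step described above can be sketched in a few lines of Python. This is only an illustration, not our production code: the text names just the “21-25”, “101-125” and “201+” groups, so every other boundary in `UPPER_BOUNDS` is an assumption, and the helper names `word_count_group` and `distribution` are hypothetical.

```python
from bisect import bisect_left
from collections import Counter

# Assumed upper bounds for each group. Only "21-25", "101-125" and "201+"
# come from the article; the remaining boundaries are made up for illustration.
UPPER_BOUNDS = [5, 10, 15, 20, 25, 50, 75, 100, 125, 150, 200]


def word_count_group(word_count):
    """Return the label of the word count group a review falls into."""
    i = bisect_left(UPPER_BOUNDS, word_count)
    if i == len(UPPER_BOUNDS):
        # Anything above the last bound lands in the open-ended group.
        return f"{UPPER_BOUNDS[-1] + 1}+"
    lower = UPPER_BOUNDS[i - 1] + 1 if i > 0 else 0
    return f"{lower}-{UPPER_BOUNDS[i]}"


def distribution(word_counts):
    """Map each group label to the share of reviews that fall into it."""
    groups = Counter(word_count_group(n) for n in word_counts)
    total = sum(groups.values())
    if total == 0:
        return {}
    return {group: count / total for group, count in groups.items()}


# The three examples from the text, plus one extra short review.
product = distribution([23, 24, 109, 600])
```

Running `distribution` once over a product’s reviews and once over every review in its category would give the two distributions that get compared in the next step.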

Once we have the word count distribution of the product and the expected distribution of the category, we compare the two and identify product word count groups that are higher in concentration than we’d expect to see. For each of these larger groups, we run a significance test to make sure the difference isn’t due to random chance or a lack of data points, but rather that the group is substantially overrepresented. If a product doesn’t have that many reviews, we are likely to see more variance due to random chance. However, if our formula determines the difference is statistically significant, we’ll label that group as an Overrepresented Word Count Group.
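To make the idea concrete, here is a minimal sketch of such a check. The article does not say which significance test we use, so the one-sided exact binomial test below is an assumption chosen for illustration, as are the `alpha` threshold and the function names.

```python
from math import exp, lgamma, log


def binomial_tail(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p), summed in log space to avoid overflow."""
    if k <= 0:
        return 1.0
    if p <= 0:
        return 0.0
    if p >= 1:
        return 1.0
    log_p, log_q = log(p), log(1 - p)
    total = 0.0
    for i in range(k, n + 1):
        log_choose = lgamma(n + 1) - lgamma(i + 1) - lgamma(n - i + 1)
        total += exp(log_choose + i * log_p + (n - i) * log_q)
    return min(total, 1.0)


def overrepresented_groups(product_counts, expected_share, alpha=0.01):
    """Return groups whose share of the product's reviews is significantly
    above the category's expected share (alpha is an assumed threshold)."""
    n = sum(product_counts.values())
    flagged = []
    for group, k in product_counts.items():
        p = expected_share.get(group, 0.0)
        if n == 0 or k / n <= p:
            continue  # not larger than expected, nothing to test
        if binomial_tail(k, n, p) < alpha:
            flagged.append(group)
    return flagged


expected = {"21-25": 0.1, "201+": 0.9}
# 2 of 10 reviews in a group expected to hold 10%: easily explained by chance.
small = overrepresented_groups({"21-25": 2, "201+": 8}, expected)
# 100 of 200 reviews in that same group: far beyond what chance allows.
large = overrepresented_groups({"21-25": 100, "201+": 100}, expected)
```

In this sketch the small product is not flagged even though its share is double the expected one, which mirrors the point above about extra variance when there are few reviews.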

There are many perfectly reasonable explanations for why a product would have a word count distribution that is vastly different from what we’d expect. For example, a product might be highly controversial or very complex, resulting in an abundance of reviews in the 201+ word count range. Or a product might be so simple that it either works or it doesn’t. How many words could one possibly use to describe a USB cable?

This is why we compare the product’s word count distribution to that of the category, and not to every single review for every product on the reviewing platform. The expected word count distribution for romance novels is going to be vastly different from what we’d expect for printer paper, so we have to model a different expectation for each category of products.

If a product has any Overrepresented Word Count Groups, we can assume there are some unnatural forces at work. These forces range from benign to malicious, but all of them can cause the rating to be skewed:

- The brand may be calling on their loyal fans or offering incentives for reviews. If you’re going to write a review as a favor, you’re probably going to write more than just a few words, but also not take the time to compose an essay. This could result in a spike of word counts in the middle of the distribution.

- The brand may be calling on “professional reviewers” who specialize in writing long and seemingly honest reviews. This may result in a spike in the longer word count groups.

- The brand may be manufacturing reviews. Whether they are doing it themselves or using a third-party service to do it for them, the result can be the same. Typically, manufactured reviews will re-hash the same few key points over and over again, or even be kept extra brief to save time. Either way, they still leave behind a trail of evidence that we can easily identify in the Overrepresented Word Count Groups.

Finally, if we find any Overrepresented Word Count Groups, we’ll list each one in the report, and add them up to see what percentage of the product’s reviews are in these word count groups. While it isn’t uncommon to see a small percentage of reviews in Overrepresented Word Count Groups, an excessively high percentage can trigger a warning or failure of this test. Furthermore, if the average rating from the reviews in Overrepresented Word Count Groups is higher than the average rating from all other reviews, we will check to see if this discrepancy is statistically significant. This means that we run the data through an equation that takes into account the total number of reviews along with the variance of the individual ratings and tells us if the discrepancy is more than just the result of random chance. (You can read more about our statistical significance tests here.) If reviews in Overrepresented Word Count Groups have a significantly higher average rating than all other reviews, it’s a strong indicator that these reviewers aren’t evaluating the product from a neutral mindset, and are unfairly inflating the overall product rating.
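As a rough sketch of this last step, the snippet below computes the share of reviews in flagged groups and then compares average ratings. The exact equation we use is not spelled out here, so the Welch-style statistic with a normal approximation is an assumption, as are the `alpha` value, the `(group, rating)` input format and the function names. No warning or failure thresholds are shown because the text does not state them.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def split_by_flag(reviews, flagged):
    """reviews is a list of (group_label, rating) pairs (an assumed format).
    Returns the share in flagged groups plus the two sets of ratings."""
    flagged_ratings = [r for g, r in reviews if g in flagged]
    other_ratings = [r for g, r in reviews if g not in flagged]
    share = len(flagged_ratings) / len(reviews) if reviews else 0.0
    return share, flagged_ratings, other_ratings


def rating_gap_is_significant(flagged_ratings, other_ratings, alpha=0.05):
    """One-sided check: is the flagged average higher than the rest by more
    than chance would explain? Uses sample sizes and rating variances."""
    if len(flagged_ratings) < 2 or len(other_ratings) < 2:
        return False  # too little data to estimate a variance
    gap = mean(flagged_ratings) - mean(other_ratings)
    if gap <= 0:
        return False  # flagged reviews are not rated higher
    std_err = sqrt(variance(flagged_ratings) / len(flagged_ratings)
                   + variance(other_ratings) / len(other_ratings))
    if std_err == 0:
        return True  # no spread at all within either set, yet a positive gap
    p_value = 1 - NormalDist().cdf(gap / std_err)
    return p_value < alpha


reviews = ([("21-25", 5)] * 40 + [("21-25", 4)] * 10
           + [("201+", 3)] * 30 + [("201+", 2)] * 20)
share, flagged_ratings, other_ratings = split_by_flag(reviews, {"21-25"})
inflated = rating_gap_is_significant(flagged_ratings, other_ratings)
```

With only a handful of ratings on each side, the same gap in averages would usually not pass the check, which is the behavior the paragraph above describes.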