ReviewMeta Analysis Test: Reviewer Ease

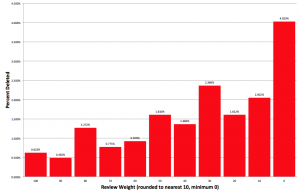

One of the fundamental shortcomings of a consumer reviewing platform is that some reviewers are much easier to please than others. At ReviewMeta we have developed the Reviewer Ease test to combat this drawback. To measure how easy a reviewer is to please, we look at all of their past reviews and calculate their average rating. This is called the Ease Score. The higher a reviewer’s Ease Score, the easier they are to please.
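
As a minimal sketch of that definition, the Ease Score is the arithmetic mean of a reviewer’s past star ratings. The function name `ease_score` and the sample rating histories below are hypothetical and only illustrate the idea; they are not ReviewMeta’s code.

```python
def ease_score(past_ratings: list[int]) -> float:
    """Average star rating across all of a reviewer's past reviews (hypothetical helper)."""
    if not past_ratings:
        raise ValueError("reviewer has no past reviews")
    return sum(past_ratings) / len(past_ratings)


# Hypothetical review histories for two reviewers
reviewer_a = [5, 3, 2, 4, 3, 3]  # a fairly critical reviewer
reviewer_b = [5, 5, 5, 5]        # only ever gives perfect scores

print(round(ease_score(reviewer_a), 2))  # 3.33
print(ease_score(reviewer_b))            # 5.0
```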

The Ease Score is essential when we compare individual reviewers. If Reviewer A has an Ease Score of 3.3 and rates a product a 5, that rating packs a bigger punch than a 5 from Reviewer B, who has an Ease Score of 5.0. Reviewer B is always pleased and consistently uncritical; they have only ever given perfect scores, whereas Reviewer A is much more conservative with their ratings. But we aren’t just comparing two reviewers. We compare each reviewer of a given product to the average Ease Score for all of the reviewers in that product’s category. Any reviewer whose Ease Score is higher than the category average is labeled as an Easy Grader.
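
The Easy Grader label can be sketched the same way. This sketch assumes, hypothetically, that the category average is the unweighted mean of every category reviewer’s Ease Score; ReviewMeta may weight or filter reviewers differently. All function names, reviewer names, and ratings are made up for illustration.

```python
def ease_score(past_ratings: list[int]) -> float:
    """Average star rating across a reviewer's past reviews."""
    return sum(past_ratings) / len(past_ratings)


def category_average_ease(histories: dict[str, list[int]]) -> float:
    """Unweighted mean of every category reviewer's Ease Score (an assumption)."""
    scores = [ease_score(h) for h in histories.values()]
    return sum(scores) / len(scores)


def easy_graders(product_reviewers: list[str],
                 histories: dict[str, list[int]],
                 category_avg: float) -> list[str]:
    """Reviewers of one product whose Ease Score exceeds the category average."""
    return [name for name in product_reviewers
            if ease_score(histories[name]) > category_avg]


# Hypothetical rating histories for every reviewer in one category
histories = {
    "alice": [5, 3, 2, 4, 3, 3],  # Ease Score ~3.33
    "bob": [5, 5, 5, 5],          # Ease Score 5.0
    "carol": [4, 4, 3, 5],        # Ease Score 4.0
    "dave": [2, 3, 4, 3],         # Ease Score 3.0
}
avg = category_average_ease(histories)  # ~3.83
print(easy_graders(["alice", "bob", "carol"], histories, avg))  # ['bob', 'carol']
```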

But the real meat and potatoes of our analysis happens when we look at the average Ease Score for all reviewers of a single product. The concept is the same as when we analyze individual reviewers. If, for example, the average Ease Score for a specific product is 5.0, the reviewers of this product have only ever given out 5-star ratings. Obviously, a 5-star rating for that product is much less meaningful when you know that its reviewers give everything 5 stars.
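
A product-level average can be sketched by computing the Ease Score of each of the product’s reviewers and then averaging those scores. The helper names and sample histories are hypothetical; the first example reproduces the all-5-star case described above.

```python
def ease_score(past_ratings: list[int]) -> float:
    """Average star rating across a reviewer's past reviews."""
    return sum(past_ratings) / len(past_ratings)


def product_average_ease(reviewer_histories: list[list[int]]) -> float:
    """Mean of the Ease Scores of everyone who reviewed one product."""
    scores = [ease_score(h) for h in reviewer_histories]
    return sum(scores) / len(scores)


# Hypothetical product whose reviewers have only ever given 5 stars
only_fives = [[5, 5, 5], [5], [5, 5, 5, 5, 5]]
print(product_average_ease(only_fives))  # 5.0

# Hypothetical product with a more critical set of reviewers
mixed = [[5, 3, 2, 4, 3, 3], [4, 4, 3, 5], [2, 3, 4, 3]]
print(round(product_average_ease(mixed), 2))  # 3.44
```

Averaging per-reviewer scores (rather than pooling every rating) keeps one prolific reviewer from dominating the product’s figure; whether ReviewMeta does the same is an assumption here.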

However, a product’s raw average Ease Score alone doesn’t tell us enough to draw meaningful conclusions; we need something to compare it to. So we grab the average Ease Score for all reviewers in the product’s category. If a product’s average Ease Score is higher than the category average, we run a series of statistical checks to see whether the difference is statistically significant. This means that we run the data through an equation that takes into account the total number of reviews along with the variance of the individual Ease Scores, and tells us whether the discrepancy is more than just the result of random chance. (You can read more about our statistical significance tests here.) If the difference is significant, we can say that these reviewers are easier to please than the category average.
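
The exact equation isn’t given here, so the sketch below swaps in a standard one-sided z-test (normal approximation) as a stand-in. It treats each reviewer’s Ease Score as one observation, uses the count and sample standard deviation of those scores to get a standard error, and asks whether the product’s mean sits above the category average by more than chance would explain. The function name, the `alpha = 0.05` threshold, counting reviewers rather than reviews, and the choice of test itself are all assumptions, not ReviewMeta’s documented method.

```python
import math
from statistics import NormalDist, mean, stdev


def is_significantly_easier(product_ease_scores: list[float],
                            category_avg: float,
                            alpha: float = 0.05) -> tuple[bool, float]:
    """One-sided z-test: is the product's mean Ease Score above the category average?

    Returns (significant, p_value). A stand-in test, not ReviewMeta's formula.
    """
    n = len(product_ease_scores)
    if n < 2:
        return False, 1.0  # stdev needs at least two reviewers
    sample_mean = mean(product_ease_scores)
    if sample_mean <= category_avg:
        return False, 1.0  # only products above the category average are tested
    std_err = stdev(product_ease_scores) / math.sqrt(n)
    if std_err == 0:
        return True, 0.0  # degenerate case: identical scores, all above the average
    z = (sample_mean - category_avg) / std_err
    p_value = 1.0 - NormalDist().cdf(z)  # probability of a gap this large by chance
    return p_value < alpha, p_value


# Hypothetical Ease Scores of two products' reviewers vs. a category average of 4.2
print(is_significantly_easier([4.8, 4.9, 5.0, 4.7, 4.9, 5.0, 4.6, 4.8], 4.2)[0])  # True
print(is_significantly_easier([4.0, 4.5, 3.9, 4.6, 4.3], 4.2)[0])  # False
```

The second product’s mean (4.26) is above 4.2, but with only five reviewers and that much spread the gap is well within what chance alone could produce. For small samples a Student’s t-test would be the more conservative choice.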

There are a lot of reasons that a product’s average Ease Score might be higher than the category average. They range from straight-up fraud to innocent selection bias, but the net result is essentially the same: the reviewers for one product are less choosy than those of other products.
